The AI inflection point

Not since the arrival of the personal computer has the classroom witnessed a technology shift as consequential as artificial intelligence. From Seoul to Seattle, software that once quietly handled spell-checking is now writing feedback, adapting lesson plans on the fly, and spotting gaps in a learner’s mental model before they ossify. For educators already juggling overcrowded curricula and administrative overload, these systems promise superpowers: real-time insight into every learner’s progress and an automated assistant that never sleeps.

The acceleration is no accident. In May 2025, more than 200 U.S. CEOs, including Microsoft’s Satya Nadella and Uber’s Dara Khosrowshahi, signed a public letter urging states to make computer-science and AI literacy mandatory for high-school graduation【1】. Across the Pacific, China’s Ministry of Education announced a sweeping plan to weave AI into textbooks, teacher training, and national assessments to “maintain economic vitality through creative talent”【2】. Beneath the headline rhetoric lies a shared recognition: tomorrow’s citizens must become fluent not only in using AI, but in collaborating with it.

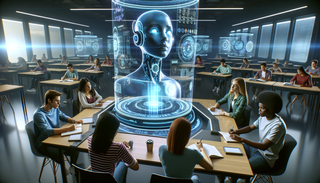

Inside the AI-enhanced classroom

What does an ordinary lesson period look like when algorithms join the roster?

- Morning warm-up. An adaptive learning platform such as DreamBox or Smart Sparrow greets each student with 8–10 tailored exercises. The system has already crunched last night’s homework, dynamically selecting problems that sit squarely in each learner’s zone of proximal development.

- Instant formative feedback. As answers flow in, pattern-recognition models flag misconceptions, perhaps a persistent sign error in algebra or a misunderstanding of photosynthesis. The teacher dashboard lights up with colored alerts, letting the educator intervene with surgical precision instead of carpet-bombing the class with review material.

- AI writing coach. During English period, a generative-language companion offers sentence-level suggestions, highlights logic gaps, and encourages rhetorical experimentation. Crucially, all revisions are logged, giving teachers a transparent window into the student’s creative process.

- Automated assessment. When the bell rings, Gradescope’s computer-vision model has already graded short-answer quizzes. Turnaround time collapses from days to minutes, freeing teachers to plan deeper discussions rather than chase rubrics.
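To make the "persistent sign error" idea concrete, here is a minimal Python sketch of how a dashboard alert might be triggered. It is an illustration only, not how any named product works: the class `MisconceptionFlagger`, the error tags, the window size, and the threshold are all assumptions, and it presumes some upstream step has already labelled each wrong answer with an error tag.

```python
from collections import Counter, deque


class MisconceptionFlagger:
    """Flags an error tag once it recurs often enough in a student's recent answers.

    Hypothetical helper: `window` and `threshold` are illustrative defaults.
    """

    def __init__(self, window=5, threshold=3):
        self.window = window
        self.threshold = threshold
        self.recent = {}  # student id -> deque of recent error tags (None = correct)

    def record(self, student, error_tag):
        """Store one answer and return the tags that currently meet the threshold."""
        history = self.recent.setdefault(student, deque(maxlen=self.window))
        history.append(error_tag)
        counts = Counter(tag for tag in history if tag is not None)
        return sorted(tag for tag, n in counts.items() if n >= self.threshold)


# Usage: three sign errors within the last five answers raise an alert.
flagger = MisconceptionFlagger(window=5, threshold=3)
alerts = []
for tag in ["sign_error", None, "sign_error", "sign_error"]:
    alerts = flagger.record("student_17", tag)
print(alerts)  # ['sign_error']
```

A sliding window keeps the alert tied to recent behaviour, so a student who has since corrected the mistake drops off the dashboard without manual cleanup.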

The result is a feedback loop that once belonged only to one-on-one tutoring. According to a meta-analysis by Sainaptic Research, adaptive platforms can cut the variance in class achievement by up to 30 percent when paired with teacher oversight【4】.

Behind the algorithms

The technical ingredients powering this revolution fall into three overlapping buckets:

• Content recommendation engines. Borrowing from the recommender systems behind services such as Netflix, these systems compute a “knowledge state vector” for each learner and rank the next best activity. Bayesian Knowledge Tracing (BKT) has long been the workhorse, but transformer-based architectures are now overtaking it by capturing long-range dependencies across subjects.

• Natural-language feedback. Large language models (LLMs) such as Claude and GPT-4o are being fine-tuned on pedagogical dialogue to generate Socratic hints, rubric-aligned essay commentary, and even gentle motivational nudges.

• Computer vision in grading. For math and science, object-detection networks scan handwritten work, locating problem-by-problem reasoning steps. Unlike multiple-choice scoring, this yields a granular error profile that teachers can interrogate.
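For readers who want to see what Bayesian Knowledge Tracing actually computes, the sketch below shows the standard single-skill update in Python: a Bayes step on the observed answer, followed by a chance of learning. The parameter values in `BKTParams` are invented for illustration and would normally be fitted to response data; the function names are likewise assumptions, not any platform's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BKTParams:
    # Illustrative values only; real systems fit these per skill.
    p_init: float = 0.2    # prior probability the skill is already mastered
    p_learn: float = 0.15  # chance of learning the skill after each attempt
    p_slip: float = 0.1    # chance of answering wrongly despite mastery
    p_guess: float = 0.2   # chance of answering correctly without mastery


def bkt_update(p_mastery, correct, params):
    """Return the mastery estimate after observing one answer."""
    if correct:
        num = p_mastery * (1 - params.p_slip)
        den = num + (1 - p_mastery) * params.p_guess
    else:
        num = p_mastery * params.p_slip
        den = num + (1 - p_mastery) * (1 - params.p_guess)
    posterior = num / den  # Bayes step: belief given the evidence
    return posterior + (1 - posterior) * params.p_learn  # learning step


def p_correct(p_mastery, params):
    """Predicted probability that the next answer on this skill is correct."""
    return p_mastery * (1 - params.p_slip) + (1 - p_mastery) * params.p_guess


# Usage: trace one learner's estimated mastery across four answers.
params = BKTParams()
mastery = params.p_init
for answer in [True, True, False, True]:
    mastery = bkt_update(mastery, answer, params)
    print(f"answer={answer} mastery={mastery:.3f} next_correct={p_correct(mastery, params):.3f}")
```

A recommendation engine could then rank candidate activities by how close `p_correct` sits to a target difficulty, which is one plausible reading of "next best activity".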

Training these models demands rivers of data—millions of anonymised question-response pairs, scanned worksheets, voice recordings. That data dependency leads directly to AI in education’s thorniest issues.

Policy, privacy, and equitable access

Every dorm-room entrepreneur dreams of building the “Spotify for learning,” yet the regulatory terrain remains unsettled.

• Data protection. Student records are protected by FERPA in the United States and by GDPR across Europe, but gray areas abound when biometric or behavioral data enters the picture. Some districts now require vendors to publish model-card-style disclosures detailing what data is collected, how long it is stored, and who can audit the code.

• Bias and fairness. Just as hiring algorithms can reflect societal inequities, adaptive learning systems risk steering underrepresented students toward remedial tracks if the historical training data is skewed. Researchers at MIT have proposed counterfactual evaluation suites (essentially running the model twice with demographic attributes swapped) to spot disparate impact before deployment.

• The teacher’s role. Automation panic aside, early adopters report that AI rarely replaces instruction; it reallocates time. Boston’s Northeastern University, now piloting access to Anthropic’s Claude for 49,000 students, found that faculty spent 26 percent less time grading but 18 percent more time in small-group mentoring sessions【3】.

• Infrastructure gaps. A rural district without reliable broadband cannot tap a cloud-hosted adaptive engine. Low-resource solutions—edge inference on cheap Chromebooks, or SMS-based tutoring in sub-Saharan Africa—remain an urgent research frontier.
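The "run the model twice with demographic attributes swapped" check described under bias and fairness can be sketched in a few lines of Python. This is a toy illustration rather than the researchers' actual evaluation suite: the function `counterfactual_flip_rate`, the deliberately biased `biased_placement` rule, the group labels, and the records are all made up for the example.

```python
def counterfactual_flip_rate(predict, records, attribute, swap):
    """Share of records whose prediction changes when `attribute` is swapped."""
    if not records:
        return 0.0
    flipped = 0
    for record in records:
        counterfactual = dict(record)  # copy so the original stays untouched
        counterfactual[attribute] = swap[record[attribute]]
        if predict(record) != predict(counterfactual):
            flipped += 1
    return flipped / len(records)


def biased_placement(record):
    """Hypothetical placement rule with a stricter cutoff for group B."""
    cutoff = 70 if record["group"] == "B" else 60
    return "remedial" if record["score"] < cutoff else "standard"


# Invented student records for the demonstration.
records = [
    {"score": 55, "group": "A"},
    {"score": 65, "group": "A"},
    {"score": 65, "group": "B"},
    {"score": 80, "group": "B"},
]

rate = counterfactual_flip_rate(biased_placement, records, "group", {"A": "B", "B": "A"})
print(rate)  # 0.5: two of the four placements depend on the group label alone
```

A flip rate of zero does not prove a model is fair, since other features can act as proxies for the swapped attribute, but a non-zero rate is a clear signal to investigate before deployment.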

Human intelligence in the loop

Ed-tech history is littered with shiny tools that gathered dust once novelty faded. The difference this time hinges on thoughtful integration. Schools reporting the highest gains share three cultural patterns:

- Professional development is continuous. Teachers receive not a one-off webinar but ongoing coaching on prompt engineering, dashboard interpretation, and ethical guardrails.

- Students are co-designers. Instead of banning AI writing aids, classrooms establish “AI disclosure statements” where learners annotate which suggestions they accepted and why. This metacognitive step turns a potential shortcut into a lesson on critical thinking.

- Evaluation is rigorous. Learning gains, engagement metrics, and equity outcomes are tracked against control cohorts, mirroring clinical-trial discipline. When an algorithm underperforms, it is retrained or retired, with no sunk-cost sentimentality.
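As a rough illustration of tracking learning gains against a control cohort, the Python sketch below computes a standardized effect size (Cohen's d with a pooled standard deviation). The score gains are invented, the function name is an assumption, and a real evaluation would add confidence intervals and account for how students were assigned to each cohort.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(treatment, control):
    """Standardized mean difference between two cohorts (pooled standard deviation).

    Assumes each cohort has at least two scores and the pooled variance is non-zero.
    """
    n_t, n_c = len(treatment), len(control)
    pooled_var = (
        (n_t - 1) * variance(treatment) + (n_c - 1) * variance(control)
    ) / (n_t + n_c - 2)
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)


# Invented pre-to-post test gains (in points) for two small cohorts.
ai_cohort_gains = [12, 9, 15, 11, 14, 10]
control_gains = [8, 10, 7, 11, 9, 6]

effect = cohens_d(ai_cohort_gains, control_gains)
print(f"mean gain difference: {mean(ai_cohort_gains) - mean(control_gains):.2f} points")
print(f"effect size (Cohen's d): {effect:.2f}")
```

Reporting an effect size rather than a raw point difference makes results comparable across tests with different scales, which helps when deciding whether a tool should be retrained or retired.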

The road ahead

Looking toward 2030, three converging trends could make AI a default layer in global education:

• Edge AI hardware. Cheap neural accelerators built into tablets will allow real-time inference even without Internet access, shrinking the digital divide.

• Multimodal tutors. Models adept at processing text, speech, diagrams, and gestures will enable more natural, conversation-rich tutoring. Imagine a physics coach that watches a student build circuits on her desk and intervenes when a resistor is misplaced.

• Credentialing shake-up. As adaptive systems gather fine-grained mastery data, universities and employers may lean less on one-shot exams, favouring longitudinal skill graphs exported from classroom AI.

Yet the future is not pre-written in code repositories. Societies must decide how much agency, transparency, and public funding these systems merit. The most important question is not “Will AI teach our children?” but “Who will decide how it teaches them, and to what ends?”

History suggests that when technology meets pedagogy, the winners are rarely the devices—they are the humans who learn to wield them wisely. If we keep educators, students, and communities firmly in the loop, the code coursing through tomorrow’s classrooms could become less a black box and more a beacon lighting every learner’s path.

Sources

1. CEOs push states to require AI and computer-science for high-school graduation – Axios.

2. China to rely on AI for sweeping education reform – Reuters.

3. Northeastern University partners with Anthropic to pilot Claude in higher education – Axios Boston.

4. “AI in Education Trends 2024: What Teachers Need to Know” – Sainaptic Research.